Rewarding reviewers – sense or sensibility? A Wiley study explained

Abstract

In July 2015, Wiley surveyed over 170,000 researchers in order to explore peer reviewing experience; attitudes towards recognition and reward for reviewers; and training requirements. The survey received 2,982 usable responses (a response rate of 1.7%). Respondents from all markets indicated similar levels of review activity. However, analysis of reviewer and corresponding author data suggests that US researchers in fact bear a disproportionate burden of review, while Chinese authors publish twice as much as they review. Results show that while reviewers choose to review in order to give back to the community, there is more perceived benefit in interacting with the community of a top-ranking journal than a low-ranking one. The majority of peer review training received by respondents has come either in the form of journal guidelines or informally as advice from supervisors or colleagues. Seventy-seven per cent show an interest in receiving further reviewer training. Reviewers strongly believe that reviewing is inadequately acknowledged at present and should carry more weight in their institutions' evaluation process. Respondents value recognition initiatives related to receiving feedback from the journal over monetary rewards and payment in kind. Questions raised include how to evenly expand the reviewer pool, provide training throughout the researcher career arc, and deliver consistent evaluation and recognition for reviewers.

Key points

- US researchers bear a disproportionate burden of peer review.

- Most reviewers would welcome further training support.

- An estimated 22 million researcher hours were spent in 2013 reviewing for the top 12 publishers.

- There is a need to increase the reviewer pool, especially in high-growth and emerging markets and among early career researchers.

- Journal rank is important to potential reviewers.

- Industry-wide agreement on core competencies may facilitate the reward and recognition of reviewers.

- Feedback from journals is a vital form of recognition for reviewers.

INTRODUCTION

Recent peer review scandals [such as the acceptance of fake papers generated by SCIgen (Seife, 2015) and the retraction of papers in Springer and BioMed Central journals (Retraction Watch, 2015) due to reviews from fake reviewers] make it more important than ever to safeguard the integrity of the science we publish and the reputation of our peer review standards. To give our authors the best peer review experience, and to deliver the highest-quality peer review, publishers need to keep evolving the support and services we offer our peer reviewers. That is why, in July 2015, Wiley surveyed over 170,000 researchers to explore peer reviewing experience, attitudes towards recognition and reward for reviewers, and training requirements; 2,982 usable responses (an effective response rate of 1.7%) were received.

Wiley's research into author needs has repeatedly shown that authors' experience of peer review shapes their overall publishing experience. Authors who express the most satisfaction with their publishing experience are those who report a smooth, problem-free review process. Conversely, authors expressing the lowest levels of satisfaction are those who experience a difficult review process and struggle to communicate with the reviewers of their paper. Furthermore, our editors tell us that recruiting reviewers is a major pain point. One managing editor of a number of Wiley journals reports that the conversion rate of reviewer invitations to acceptances has dropped by at least 5% over the past 5 years. Once reviewers have been recruited, editors are keen to find better ways to reward those whom they trust, who deliver on time, and who produce high-quality work. Taken together, these observations point to three primary needs:

- a need to increase the reviewer pool;

- a need to ensure reviewers in that pool are well trained, trustworthy, and produce good quality reviews; and

- a need to find ways to reward reviewers in order to recognise their work and maintain motivation.

Other large-scale studies (Sense About Science's comprehensive study of 2009 and the Publishing Research Consortium report of 2008) are now several years old. The aims of this survey were to take a current temperature check of reviewer experience, to take a deeper dive into the recognition and training needs of reviewers, and to look more closely at how reviewing behaviour and motivations change according to experience, career stage, and region.

METHODOLOGY

Survey invitations were sent by email in July 2015 to 170,414 authors from two distinct lists: (1) Wiley authors who had published in any Wiley journal since 2012 and who had opted to receive e-marketing messages; and (2) a random sample of 4,950 authors who had published in any journal that received a 2014 Impact Factor from Thomson Reuters. It was not possible to send the survey to Wiley's database of reviewers because of data protection issues (reviewers are not asked to opt in to receive e-marketing messages). As studies suggest that 90% of authors are also reviewers (Ware, 2008), author lists were judged the most reliable means of reaching large numbers of reviewers. A screening question was used to ensure that all respondents had reviewed a paper in the past 3 years.

The invitation and survey were delivered in both English and Mandarin. The Mandarin option was provided because previous experience at Wiley has shown that response rates from Chinese respondents are greatly improved if the survey and covering email are delivered in their native language. Non-respondents were sent an email reminder. In all, 3,630 people logged into the Web-based survey from the email campaigns. A total of 648 logged surveys were excluded from the analysis (209 did not answer the first question, 387 had not reviewed an article in the past 3 years, and 52 were unsure whether they had reviewed in the past 3 years), leaving 2,982 usable responses, an effective response rate of 1.7%.
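The exclusion and response-rate arithmetic above can be checked in a few lines of Python. This is a minimal sketch. The function name `usable_responses` and the shortened exclusion labels are illustrative. The only inputs are the counts reported in this section.

```python
# Counts reported in the Methodology section.
INVITED = 170_414
LOGGED_IN = 3_630
EXCLUDED = {
    "did not answer the first question": 209,
    "had not reviewed in the past 3 years": 387,
    "unsure whether they had reviewed in the past 3 years": 52,
}


def usable_responses(logged_in: int, excluded: dict[str, int]) -> int:
    """Logged-in surveys minus every screened-out survey."""
    return logged_in - sum(excluded.values())


usable = usable_responses(LOGGED_IN, EXCLUDED)
rate = usable / INVITED * 100  # effective response rate, in per cent

print(f"excluded: {sum(EXCLUDED.values())}")
print(f"usable:   {usable}")
print(f"rate:     {rate:.1f}%")
```

The effective rate is computed against all invitations sent, not against the surveys that were opened. This is why it is so much lower than the share of logged-in surveys that proved usable.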

Of the usable responses, 81% of respondents provided country information. For the remaining respondents, country was deduced from their IP address. Countries were classified into regions using Wiley's internal classification of market designations (Emerging: Nigeria, Malaysia, South Africa, Turkey, Mexico, Chile; High Growth: China, India, Brazil; and Mature: USA, UK, Germany, Australia, South Korea, Japan).

- Country representation: Authors residing in the USA comprised nearly 14% of total responses, followed by China (11%), Italy (8%), Spain (6%), and the UK (5%).

- Market representation: Authors residing in emerging markets comprised 5% of total responses, those in high-growth markets 20%, and those in mature markets 28%; the remaining respondents were classified as rest of the world.

- Discipline representation: Authors representing the life sciences comprised 30% of total responses, followed by health sciences (27%), physical sciences (26%), and social sciences and humanities (17%).

- Primary place of work: Nearly 60% of respondents listed university or college as their primary place of work, followed by research institution (19%), and hospital/healthcare institution (10%).

- Age of respondent: The majority of survey respondents (63%) were between 31 and 50 years of age.

Although respondents to the survey are proportionately representative of the regional and subject-based distribution of Wiley's publishing community, the study may be subject to self-selection bias: respondents who chose to participate may not represent the entire target population of all reviewers. For this reason, inferential statistical tests (viz. hypothesis testing) were not used; instead, this report focuses on highlighting major differences and trends in the data. This report does not examine the biases that may be present in the wording, formatting, and ordering of survey questions.

FINDINGS AND DISCUSSION

Reviewer motivation

A considerable amount of researcher time is spent reviewing journal articles. Rubriq (2013) calculated that approximately 30.5 million researcher hours were spent on reviewing. Taking the top 12 publishers alone, we estimate that at least 22 million researcher hours were spent reviewing papers in 2013 (Table 1).

| In 2013 | No. | Calculation notes | Sources |
|---|---|---|---|
| No. of articles published | 973,121 | Articles published by the top 12 publishers with the greatest market share of published articles in 2013 | Web of Science (www.webofknowledge.com) |
| No. of articles submitted | 2,432,803 | On average, 40% of all submitted articles are published. Therefore, 973,121/40 * 100 = 2,432,803 | Rubriq, 2013; Ware & Mabe, 2012 |
| No. of reviews | 4,420,403 (on both published and rejected articles) | 79% of all submitted articles undergo review (regardless of ultimate publication status). Therefore, 2,432,803/100 * 79 = 1,921,914. Average of 2.3 reviews per article. Therefore, 1,921,914 * 2.3 = 4,420,403 | Rubriq, 2013; Ware, 2008; Ware & Mabe, 2012 |
| No. of review hours | 22,102,015 | Average of five hours per review. Therefore, 4,420,403 * 5 = 22,102,015 | Rubriq, 2013 |
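The Table 1 estimate is a simple multiplicative chain. The sketch below reproduces it in Python. The function and field names are illustrative, and the default arguments are the Table 1 assumptions. Intermediate counts are not rounded here, so the results can differ from the table by a unit or so in the last digits.

```python
from dataclasses import dataclass


@dataclass
class ReviewEstimate:
    submitted: float
    reviewed: float
    reviews: float
    hours: float


def estimate_review_hours(
    published: int = 973_121,          # top 12 publishers, 2013 (Web of Science)
    acceptance_rate: float = 0.40,     # share of submissions that are published
    share_reviewed: float = 0.79,      # share of submissions sent out for review
    reviews_per_article: float = 2.3,  # average reviews per reviewed article
    hours_per_review: float = 5.0,     # average hours per review
) -> ReviewEstimate:
    """Chain the Table 1 assumptions without rounding intermediate values."""
    submitted = published / acceptance_rate
    reviewed = submitted * share_reviewed
    reviews = reviewed * reviews_per_article
    hours = reviews * hours_per_review
    return ReviewEstimate(submitted, reviewed, reviews, hours)


est = estimate_review_hours()
print(f"submitted: {est.submitted:,.0f}")
print(f"reviewed:  {est.reviewed:,.0f}")
print(f"reviews:   {est.reviews:,.0f}")
print(f"hours:     {est.hours / 1e6:.1f} million")
```

Every step is a straight multiplication, so changing any single assumption scales the total proportionally. For example, `estimate_review_hours(hours_per_review=3)` shows how sensitive the headline figure is to the five-hour average.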

What are the motivations for spending such a large amount of time reviewing? The results from our survey confirm the findings from other industry reports that reviewers choose to review because it allows them to actively participate in the research community and they feel it is important to reciprocate the peer review that they themselves receive.

However, there are some underlying differences by career stage. Reviewing as a path to improving one's writing skills, along with reputation building and career progression, is rated more highly by those who have been reviewing for fewer years and who are therefore more likely to be early in their careers (Table 2).

Mean importance by number of years reviewing

| Reason for becoming a reviewer | <1 | 1–2 | 3–5 | 6–10 | 11–15 | 15+ | All responses |
|---|---|---|---|---|---|---|---|
| I enjoy seeing work ahead of publication | 3.6 | 3.6 | 3.6 | 3.5 | 3.5 | 3.4 | 3.5 |
| Peer reviewing helps to improve my own writing skills | 4.1 | 3.9 | 3.8 | 3.6 | 3.4 | 3.1 | 3.7 |
| Peer reviewing allows me to be an active participant in my research community | 4.3 | 4.3 | 4.2 | 4.2 | 4.1 | 4.1 | 4.2 |
| Reviewing helps to develop my personal reputation and career progression | 3.9 | 3.8 | 3.6 | 3.5 | 3.3 | 3.0 | 3.5 |
| It is expected that researchers undertake peer review | 3.7 | 3.8 | 3.7 | 3.8 | 3.8 | 4.0 | 3.8 |
| I was recommended to become a peer reviewer by my PI/supervisor | 2.9 | 2.8 | 2.6 | 2.5 | 2.3 | 2.1 | 2.5 |
| It is important to reciprocate the peer review that other members of my community undertake for my own work | 3.8 | 3.8 | 3.8 | 4.0 | 4.0 | 4.0 | 3.9 |
| Peer reviewing helps to build relationships with particular journals and journal editors | 3.9 | 3.7 | 3.5 | 3.4 | 3.4 | 3.3 | 3.5 |
| It increases the likelihood of my future papers being accepted | 2.9 | 2.8 | 2.7 | 2.6 | 2.5 | 2.2 | 2.6 |
| I gain professional recognition or credit from reviewing | 3.6 | 3.4 | 3.4 | 3.3 | 3.1 | 2.9 | 3.3 |
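The career-stage pattern in Table 2 becomes clearer if the 15+ years rating is subtracted from the <1 year rating for each reason. The Python sketch below does this. The shortened row labels are our own abbreviations of the Table 2 wording. A positive gap means the newest reviewers rate the reason as more important than the most experienced reviewers do.

```python
# Mean importance ratings from Table 2: (<1 year, 15+ years of reviewing).
# Row labels are abbreviated from the table wording.
RATINGS = {
    "Enjoy seeing work ahead of publication": (3.6, 3.4),
    "Improves my own writing skills": (4.1, 3.1),
    "Active participant in my research community": (4.3, 4.1),
    "Personal reputation and career progression": (3.9, 3.0),
    "Expected that researchers undertake peer review": (3.7, 4.0),
    "Recommended by my PI/supervisor": (2.9, 2.1),
    "Reciprocate peer review of my own work": (3.8, 4.0),
    "Build relationships with journals and editors": (3.9, 3.3),
    "Increases likelihood of my papers being accepted": (2.9, 2.2),
    "Professional recognition or credit": (3.6, 2.9),
}


def experience_gap(ratings: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Return (reason, newest minus most experienced rating), largest gap first."""
    # round() absorbs floating-point noise such as 4.1 - 3.1 = 0.9999999999999996
    gaps = [(reason, round(new - old, 1)) for reason, (new, old) in ratings.items()]
    return sorted(gaps, key=lambda item: item[1], reverse=True)


for reason, gap in experience_gap(RATINGS):
    print(f"{gap:+.1f}  {reason}")
```

On these figures, improving writing skills (+1.0) and reputation/career progression (+0.9) show the largest gaps. The expectation that researchers review and the wish to reciprocate are the only reasons that the most experienced reviewers rate more highly.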

The top factor influencing the decision to accept a specific review invitation is the prestige and reputation of the journal, and this holds true across all age groups (Table 3). Both early career and established researchers also rank the prestige and reputation of the journal as the most influential factor in how much time they spend on a review and in their commitment to meeting review deadlines. A personal relationship and the opportunity to network with the requesting editor ranks second for both groups.

Mean ranking (all responses)

| Factor | Accepting invite | Time spent | Meeting deadline |
|---|---|---|---|
| Prestige and reputation of the journal | 1.5 | 1.5 | 1.6 |
| Personal relationship/networking opportunity with the requesting editor | 3.0 | 2.8 | 2.4 |
| Reviewer acknowledgement in the journal | 3.4 | 3.3 | 3.5 |
| Feedback provided by the journal post-review | 3.6 | 3.5 | 3.7 |
| Reviewer benefits/rewards offered by the journal | 3.7 | 4.0 | 3.8 |
| CME/CPD credit/accreditation awarded for review activity | 4.8 | 4.9 | 4.9 |
| Reviewer credit awarded on 3rd party website | 5.2 | 5.3 | 5.3 |

Note: This was a rank-order question. The mean indicates the average ranking each item received (lower values indicate greater influence).

This suggests that while reviewers choose to review in order to give back to the community, they also perceive more benefit in interacting with the community of a top-ranking journal than with that of a low-ranking one. Reputation management and career progression appear to be factors here.
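The note to Table 3 describes a mean rank. Each respondent orders the factors, and each factor's score is the average of the positions it received, so lower values mean greater influence. A minimal Python sketch follows. It assumes that every respondent ranks every item, since the survey's handling of partial rankings is not described. The factor labels and the three responses are invented for illustration and are not survey data.

```python
from statistics import mean


def mean_ranks(responses: list[list[str]]) -> dict[str, float]:
    """Average position of each item across rank-order responses (1 = ranked first)."""
    positions: dict[str, list[int]] = {}
    for ranking in responses:
        for position, item in enumerate(ranking, start=1):
            positions.setdefault(item, []).append(position)
    return {item: mean(ps) for item, ps in positions.items()}


# Hypothetical responses, for illustration only (not survey data).
responses = [
    ["Journal prestige", "Editor relationship", "Acknowledgement"],
    ["Journal prestige", "Acknowledgement", "Editor relationship"],
    ["Editor relationship", "Journal prestige", "Acknowledgement"],
]

for item, rank in sorted(mean_ranks(responses).items(), key=lambda kv: kv[1]):
    print(f"{rank:.2f}  {item}")
```

A mean rank reflects relative position only. An item ranked a close second by everyone and an item that splits respondents between first and last can end up with similar scores.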

Who is bearing the reviewing burden?

Our survey indicates that 49% of reviewers currently review for five or more journals. Experienced reviewers (those with more than 5 years of reviewing experience) shoulder even more of the burden, with 61% reviewing for five or more journals. The phrasing of the question ('How many journals do you currently review for?') introduces some ambiguity. Because the word 'currently' was not defined, reviewers may have counted either the journals for which they are currently completing a review or the journals on whose reviewer lists they currently sit. Either way, the responses indicate that around half of respondents are undertaking, or could be asked at any time to undertake, a considerable volume of reviews.

In the survey, respondents from emerging and high-growth markets report that they currently act as reviewers for approximately the same number of journals as respondents in mature markets (Table 4). In other words, they are 'on the books' for the same number of journals (or more, in the case of China) as their US and Western European counterparts.

Results shown by market

| Journals | Emerging (%) | High growth (%) | Mature (m2) (%) | Rest of the world (%) |
|---|---|---|---|---|
| 0 | 3 | 5 | 4 | 4 |
| 1 | 8 | 11 | 9 | 8 |
| 2 | 13 | 14 | 10 | 12 |
| 3 | 17 | 18 | 14 | 14 |
| 4 | 9 | 10 | 11 | 11 |
| 5 | 10 | 8 | 12 | 12 |
| 6–10 | 25 | 22 | 26 | 25 |
| >10 | 14 | 11 | 13 | 15 |
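Table 4 can be collapsed into the 'five or more journals' measure used at the start of this section. The Python sketch below sums the bottom three rows for each market. Because the published percentages are rounded, the results are approximate. They are also unweighted per-market figures rather than a recomputation of the overall 49%. The names `BUCKETS`, `TABLE_4`, and `share_five_or_more` are illustrative.

```python
# Table 4: % of respondents by number of journals currently reviewed for.
BUCKETS = ["0", "1", "2", "3", "4", "5", "6-10", ">10"]
TABLE_4 = {
    "Emerging":          [3, 8, 13, 17, 9, 10, 25, 14],
    "High growth":       [5, 11, 14, 18, 10, 8, 22, 11],
    "Mature":            [4, 9, 10, 14, 11, 12, 26, 13],
    "Rest of the world": [4, 8, 12, 14, 11, 12, 25, 15],
}


def share_five_or_more(percentages: list[int]) -> int:
    """Sum the '5', '6-10' and '>10' buckets for one market's column."""
    return sum(percentages[BUCKETS.index("5"):])


for market, column in TABLE_4.items():
    print(f"{market}: {share_five_or_more(column)}%")
```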

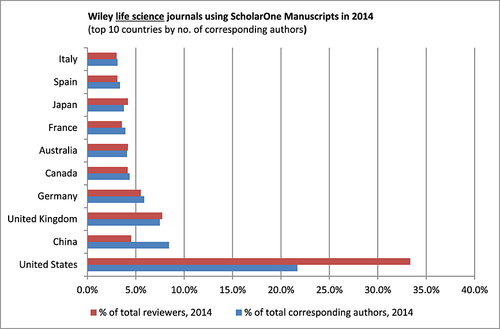

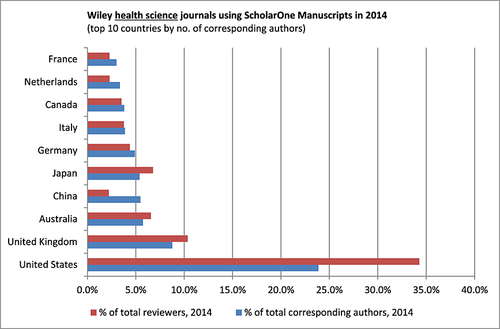

However, comparing the geographical distribution of reviewers completing reviews with that of authors publishing articles in 2014 gives a different insight into reviewer workload. Using ScholarOne, we looked at the top 10 countries (by number of contributing authors) for Wiley health and life science journals. This revealed that US researchers are reviewing 33% and 34% of the papers in these two subject areas, while publishing only 22% and 24% of papers. Chinese researchers, on the other hand, are publishing twice as many papers as they are reviewing (Figs 1 and 2). It has been suggested that a fair 'reviewer commons' is one in which researchers review as many papers as they themselves have had reviewed (Coin, 2015).
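A simple way to express this imbalance is to divide a country's share of reviews by its share of published papers. A ratio of 1.0 corresponds to a balanced 'reviewer commons', while values above and below 1.0 mean more reviewing or more publishing respectively. This is our own illustrative simplification. Coin's (2015) idea concerns individual researchers, whereas the ScholarOne comparison uses aggregate country shares. The function name `review_balance` is hypothetical.

```python
def review_balance(review_share: float, publication_share: float) -> float:
    """Ratio of a country's share of reviews to its share of published papers.

    1.0 means the country reviews in proportion to what it publishes;
    above 1.0 it reviews more than it publishes, below 1.0 it publishes more.
    """
    if publication_share <= 0:
        raise ValueError("publication_share must be positive")
    return review_share / publication_share


# US shares (%) from the ScholarOne comparison, one pair per subject area.
for review_pct, publish_pct in [(33, 22), (34, 24)]:
    print(f"US: {review_balance(review_pct, publish_pct):.2f}")
```

On this measure, the US figures give ratios of about 1.4 to 1.5. The Chinese pattern described above, publishing about twice as much as reviewing, corresponds to a ratio of roughly 0.5.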

As editors frequently invite past authors to be reviewers, one would expect the number of reviewers to grow along with the number of authors. This apparent regional imbalance could partly explain the increasing difficulty in finding reviewers, and the burden created by a growing number of articles without a commensurate growth in reviewers.

Anecdotally, some editorial offices observe that Chinese researchers have one of the highest review invitation acceptance rates. Like researchers across the full pool, Chinese researchers rate being an active participant in the research community as the most influential factor in choosing to become a reviewer, with a mean rating of 4.3. Perhaps unsurprisingly, Chinese researchers rate improving their own writing skills (3.8) and gaining professional recognition (3.8) more highly than the overall means across all regions (3.7 and 3.3, respectively; Table 2).

This apparent uneven geographical spread raises some interesting questions. It is possible that more emphasis is being placed on the importance of authoring over reviewing in some high-growth markets such as China and within emerging market institutions. But, when asked, high-growth and emerging market reviewers demonstrate an appreciation of the value of reviewing activity, for the benefit both of the research community and personal development.

So, why is there an apparent imbalance in reviewing versus authoring effort? Is there an issue around editorial trust and confidence in the reviewing standards of emerging and high-growth market researchers? Is it more difficult for editors of international journals to find referees from, for example, China, because they do not know where to look? Or are there generally fewer experienced or skilled reviewers in these regions? Could training support help attract more reviewers from these regions to the pool, provide evidence of reviewing skill, and possibly help alleviate the pressure elsewhere?

What type of training do reviewers receive?

It has frequently been asserted that there is a lack of guidance about how to perform a good review, and reviewers are expected to ‘learn on the job’ (Sense About Science, 2009). This is borne out in the responses to our survey about the types of training that reviewers have previously received.

The most common types of peer review training received by respondents to date are guidelines and informal advice. Guidelines include a journal's instructions for reviewers (32%) and the Committee on Publication Ethics (COPE) ethical guidelines (18%); informal advice comes from supervisors (19%) or colleagues (16%). This holds true across all regions. Far fewer respondents have received more formal training: 4% have participated in a journal's reviewer mentoring programme, 4% have attended a workshop or seminar on the topic, and 3% and 2% have watched a video or a webinar, respectively (Table 5).

Responses by number of years reviewing (%)

| Training received | <1 | 1–2 | 3–5 | 6–10 | 11–15 | 15+ | All responses (%) |
|---|---|---|---|---|---|---|---|
| Guidance from my PI/supervisor | 25 | 22 | 20 | 17 | 15 | 14 | 19 |
| Participation in a journal-level reviewer mentoring scheme (across multiple journals) | 2 | 4 | 5 | 4 | 2 | 4 | 4 |
| Physical workshops/seminars on how to review | 7 | 5 | 4 | 4 | 3 | 3 | 4 |
| Live webinars on how to review | 2 | 2 | 2 | 2 | 1 | 1 | 2 |
| Videos on how to review | 2 | 4 | 3 | 3 | 2 | 2 | 3 |
| Reading of general review ethics guidelines (e.g. COPE) | 14 | 17 | 18 | 19 | 20 | 17 | 18 |
| Reading of journal-level guidelines for reviewers | 30 | 29 | 30 | 33 | 34 | 37 | 32 |
| Informal counselling from peer network | 16 | 14 | 15 | 15 | 17 | 17 | 16 |
| Other | 2 | 2 | 2 | 3 | 5 | 6 | 3 |

When asked what type of training reviewers would find most useful, journal and publisher guidelines continue to be ranked highest overall as useful resources for reviewers. However, there are some differences in the rankings between early and late career respondents. Early career respondents rate guidance and mentoring as important, while late career respondents rank general ethics guidelines for peer reviewers as more important.

What training needs do reviewers have?

How confident are reviewers in their reviewing skills? On average, reviewers rate their own skills at 3.7 out of a maximum of 5. Not surprisingly, those with less reviewing experience rate themselves as less skilled than those with more: respondents who have reviewed fewer than 10 papers have a mean score of 3.4, compared with 4.2 for those who have reviewed more than 100 papers to date. Reviewers in Asia have the lowest mean score (3.4) and US reviewers the highest (4.0). This difference is perhaps more indicative of cultural differences and lower baseline self-assessment levels than an accurate reflection of reviewing skill.

Despite expressing relatively high levels of confidence, 77% of reviewers express an interest in receiving further training. As we would expect, demand is particularly strong (89%) among respondents with 5 or fewer years of reviewing experience. However, established researchers also express an interest in training (75% of those with 6–10 years of reviewing experience and 64% of those with 11–15 years of experience) (Table 6). Notably, the fundamentals of reviewing, such as constructing a review report and providing constructive, useful feedback, consistently elicit the highest interest across all experience levels (Table 7).

Responses by number of years reviewing (%)

| Interested in further training? | <1 | 1–2 | 3–5 | 6–10 | 11–15 | 15+ | All responses (%) |
|---|---|---|---|---|---|---|---|
| No | 6 | 8 | 14 | 25 | 36 | 50 | 23 |
| Yes | 94 | 92 | 86 | 75 | 64 | 50 | 77 |

Responses by number of years reviewing (%)

| Training topic | <1 | 1–2 | 3–5 | 6–10 | 11–15 | 15+ | All responses (%) |
|---|---|---|---|---|---|---|---|
| Introduction to becoming a peer reviewer | 14 | 11 | 9 | 7 | 6 | 7 | 9 |
| Handling conflicts of interest | 4 | 3 | 3 | 4 | 5 | 5 | 4 |
| Handling plagiarism issues | 8 | 9 | 9 | 11 | 11 | 11 | 10 |
| Constructing a review report | 11 | 12 | 11 | 12 | 10 | 12 | 11 |
| Providing constructive, useful feedback | 8 | 10 | 10 | 12 | 13 | 13 | 11 |
| Working with editors during the review process | 7 | 7 | 8 | 9 | 9 | 9 | 8 |
| How to review a qualitative research article | 9 | 9 | 8 | 8 | 8 | 6 | 8 |
| Reviewing a quantitative research article | 5 | 6 | 6 | 6 | 6 | 5 | 6 |
| Performing a statistical review | 8 | 8 | 8 | 7 | 7 | 7 | 7 |
| Reviewing a clinical paper | 5 | 5 | 5 | 5 | 3 | 4 | 5 |
| Reviewing a systematic literature review paper | 6 | 7 | 7 | 7 | 7 | 7 | 7 |
| Reviewing data | 6 | 6 | 6 | 5 | 5 | 4 | 6 |
| Handling re-reviews | 4 | 4 | 5 | 5 | 5 | 7 | 5 |
| Understanding/checking against reporting standards guidelines | 4 | 4 | 4 | 3 | 3 | 3 | 3 |

Responses show some variation between disciplines. There is higher demand for training in how to review a qualitative research article in the social sciences and humanities, and greater demand for training in performing a statistical review, reviewing a systematic literature review, reviewing data, and handling re-reviews in the health and life sciences. In addition, there is specific interest in how to review a clinical paper from health science respondents.

There are also some regional differences. Asian reviewers express much higher demand than their Western counterparts for an introduction to becoming a peer reviewer, for training in working with editors, and for training in reviewing a qualitative research paper.

Recognition for reviewing

Reviewers strongly believe that reviewing is inadequately acknowledged at present and should carry more weight in their institutions' evaluation process. Moreover, respondents say they would spend more time reviewing if their institution recognised this task (Table 8). Some work was undertaken in this area in 2012, when Sense About Science's Voice of Young Science Network lobbied for the Higher Education Funding Council for England to recognise peer reviewing in the UK Research Excellence Framework (Sense About Science, 2012). In 2015, a group of Australian Wiley editors led by Associate Professor Martha Macintyre published an open letter to the Australian Research Council (Meadows, 2015). The letter requested greater acknowledgement of the importance of editing and assessing research within the Excellence in Research for Australia assessment system.

| Statement | Mean response |
|---|---|
| Reviewing should be acknowledged as a measurable research output by research assessment bodies/my institution | 4.2 |
| I would spend more time reviewing if it was recognised as a measurable research activity by research assessment bodies/my institution | 4.0 |
| Reviewing is not sufficiently acknowledged as a valuable research activity by research assessment bodies/my institution | 3.9 |

But putting institutional recognition aside, what type of recognition do reviewers want from their journals and publishers?

Survey respondents were shown a list of reward and recognition initiatives and asked to select those that would make them more likely to accept an invitation to review. Individual initiatives were grouped into five categories: acknowledgements, accreditations, rewards, performance-based rewards, and feedback. Three of the six most frequently selected individual initiatives relate to receiving feedback from the journal: information on the quality of their review, on the decision outcome, and on other reviewers' comments (Table 9).

Acknowledgement is also highly valued, whether in the printed journal, on the journal website, or in a personal note from the editor. Respondents are clearly more interested in receiving feedback and thank-yous from the editor or journal for their reviewing efforts than in cash or in-kind payments, although personal access to journal content also features highly (Table 9).

| Reward and recognition initiative | Votes (% all responses) |
|---|---|
| Acknowledgements | |
| Acknowledgement in the journal | 6 |
| Acknowledgement on the journal's website | 5 |
| A personal thank you note from the editor | 5 |
| Name being published alongside the paper as one of the reviewers | 3 |
| Signed report being publicised with the paper | 1 |
| Accreditations | |
| A certificate from the journal to acknowledge review effort | 7 |
| Credit automatically awarded on a 3rd party site | 3 |
| CME Accreditation/CPD points | 2 |
| Rewards | |
| Personal access to journal content | 6 |
| Discount/waiver on Open Access fees | 4 |
| Access to papers which I have reviewed, if accepted and published | 4 |
| Discount/waiver on colour or other publication charges | 4 |
| Cash payment by the journal | 3 |
| Reviewer web badges that you could include on your LinkedIn site/online resume, etc. | 3 |
| Book discount | 3 |
| Payment in-kind by the journal | 2 |
| Payment or credits by independent/portable peer review services | 1 |
| Performance-based rewards | |
| Reviewer of the year awards from the journal | 5 |
| "Top reviewer" badges that you could include on your LinkedIn site/online resume, etc. | 4 |
| Feedback | |
| Feedback from the journal on the usefulness/quality of your review | 8 |
| Information from the journal on the decision outcome of the paper that you reviewed | 8 |
| Visibility of other reviewers' comments/reviewer reports | 6 |
| Metrics related to your review history | 3 |
| Post-publication metrics related to the articles you have reviewed | 3 |

In many ways, this is consistent with what we have learned about reviewer motivation. As noted earlier, reviewers are motivated by a desire to participate actively in their research community; they want to know that their contribution has been well received and was worth the precious time they spent.

The four most preferred reward and recognition initiatives are the same across all markets. However, reviewers in high-growth markets regard acknowledgement in the journal or on its website as less important than access to the papers they have reviewed or a digital 'Top reviewer' badge that could be displayed on personal websites and social media. Reviewers in mature markets show a higher preference for discounts or waivers on Open Access fees.

FUTURE DEVELOPMENTS AND CONCLUSIONS

Returning to the three primary needs outlined at the beginning of this article, we conclude the following:

A need to increase the reviewer pool

To reduce reviewer workload, there is a need to increase the pool by attracting early career researchers and reviewers from new markets, including high-growth and emerging regions. However, the apparent uneven geographical spread of the reviewing burden suggests that there is also a need to make sure the work is spread more evenly.

There may well be process improvements that publishers could make to better identify and record possible reviewers. Increasing use of customer insight tools, either developed in-house or supplied by vendors such as DataSalon (http://www.datasalon.com) or Salesforce (www.salesforce.com), may make it easier to identify potential reviewers.

Formal recognition of review activity as a measurable research output by research assessment bodies could help alleviate some of the time allocation issues, as reviewing would be seen as part of researchers' activity rather than an additional commitment. Such recognition could be facilitated by a taxonomy of contributor roles, such as Project CRediT (http://casrai.org/CRediT), and by unique researcher identifiers for reviewers, such as ORCID (http://orcid.org/).

With specific reference to Chinese reviewers, further research is needed to assess the extent to which the imbalance is due to any or all of the following factors:

- a lack of recognition for reviewing activity, which may be causing fewer Chinese researchers to undertake reviews;
- a skills deficit, which may mean that Chinese reviewers are less confident at reviewing for international journals;
- a possible reluctance of international journal editors to use Chinese reviewers (due to either a real or a perceived skills gap); and/or
- difficulties in identifying potential reviewers from China.

Our findings suggest that journal rank plays an important role in researchers' decisions to accept a review invitation. This could imply a tiered reviewer market, in which researchers are more willing to review for higher-impact journals. If this is indeed the case, lower-ranking journals may need to work harder or employ different tactics to attract and motivate reviewers, perhaps through reviewer mentoring or reward. However, further analysis is required to determine whether lower-impact journals find it more difficult than high-impact journals to recruit enough reviewers, or to encourage reviewers to deliver reviews on time and to the highest standard.

A need to ensure reviewers in that pool are well trained, trustworthy, and produce good quality reviews

While specific training needs vary across regions, subject, and experience levels, there is evidence from this study and past research (Sense About Science, 2009) showing agreement that better training is needed, both in order to help bring more reviewers into the pool and to make sure that editors trust and are confident in using those reviewers.

While publishers want to develop author services globally and build key partnerships, there is also a growing number of publicly funded organizations in emerging markets with an agenda to increase the internationalization and visibility of the research they fund. Author, reviewer, and editor training (especially in writing well in English) is critical to these institutions' visibility goals. Such training also strengthens the standing of publicly funded organizations as pivotal research bodies within their regions.

This demand for training, at both the individual and the institutional level, creates opportunities for publishers to provide these services and become valuable partners for research institutions in emerging and high-growth markets. In addition, editing services such as Editage or Edanz, and/or third-party reviewer services such as Rubriq and Peerage of Science, could have a role to play in helping to train reviewers. This survey suggests that few respondents currently have experience with third-party reviewer services: only 10% of respondents indicated that they had provided reviews for Peerage of Science, Academic Karma, Rubriq, or Axios Review. However, exploring reviewer receptivity to training from these operators, and the business models around it, could be another useful area for future research. The findings of this survey suggest that training support for reviewers is needed throughout the researcher career arc, not just for those new to reviewing.

A need to find ways to reward reviewers in order to recognise their work and maintain motivation

In many ways, training and recognition/reward issues are different sides of the same coin. The drivers for more effective reward and recognition initiatives are a combination of the need to adequately compensate reviewers for the time and effort they invest, a desire to keep reviewers motivated, and a need to reward the best reviewer attributes and behaviours in order to maintain peer review quality standards over time.

However, the question remains: what are reviewers being rewarded for? Simply completing a review, or completing a review well? This study did not explore this area in detail, and it needs further follow-up. Most journals monitor quantitative aspects of reviewer performance: punctuality in delivering a report, review invitation acceptance rate, number of review reports delivered, and so on. This is relatively easy to do, as the numbers are readily available and indisputable. Measuring the quality or usefulness of a review, however, is a different matter. A considerable number of Wiley journals have developed frameworks for qualitative assessment of reviewer performance, but reliably scaling this approach up to the programme level is a challenge, because it relies on multiple editors across multiple journals applying assessment standards consistently.

This survey suggested that the most valued recognition initiatives are as much about improving editorial workflows (for example, by telling reviewers how useful their review was and sharing decision outcomes) as they are about receiving more formal compensation. In this sense, feedback is itself a powerful form of reward. Many journals share decision outcomes and other reviews with their reviewers, but how much more powerful would it be if we could harness this form of evaluation and bring to our feedback the same consistency that reviewers are asked to bring to the quality of their comments?

Core competencies – a possible solution

Publishers need a consistent answer to the question: what makes a good reviewer? Studies have tried to quantify the characteristics of a good reviewer (experience, proven review frequency, etc.), but these studies are based primarily on quantitative factors (Black, van Rooyen, Godlee, Smith, & Evans, 1998). Alternatives, such as identifying attributes from the profile of current reviewers, could simply reinforce the existing distribution of reviewing effort.

One possible solution is the concept of core competencies, as advocated by Moher (2014) and others (Glasziou et al., 2014). If we want to train reviewers effectively and also measure their performance, there may be a need to establish an industry-wide set of minimum core reviewer competencies. A set of universally agreed reviewer competencies, with some variation at subject level, could provide the basis for both a training framework and the ongoing measurement and evaluation of reviewer quality.

Earlier in this article, we asked whether there is a lack of trust in the reviewing ability of researchers from emerging and high-growth markets. A training and recognition mechanism based on core competencies could help alleviate this issue. There is an opportunity for centrally funded reviewer training programmes, delivered by publishers who have the expertise and the content, tailored to regional needs, and designed to deliver learning outcomes based on these competencies. In 2014 alone, over 30 editorials in Wiley journals offered guidance on peer reviewing. These were among the most highly viewed articles of the year, indicating strong researcher interest in more information and guidance on reviewing.

Finally, the same competency framework could also be used to provide consistent and meaningful feedback on review activity to more established reviewers. The recently launched Think. Check. Submit. campaign (thinkchecksubmit.org) is a good example of what can be achieved by a coalition across the field of scholarly communications. Similar collaboration could greatly facilitate progress on this issue too.

ACKNOWLEDGEMENTS

Thanks go to Catherine Giffi, Wiley, and Jill Yablonski, Wiley, for their assistance in the data collection and analysis.